Using deep learning to predict my budget categories

What are budget categories?

I like to tag every financial transaction we make with a category that is both precise and useful:

- I have a category for each theme I may want to understand better, so that together they cover the whole gamut of expenses (takeout, restaurant, dog/health, mortgage, etc.)

- I use subcategories to provide a different "zoom" level over some expenses. For example, dog/health, dog/toy, and dog/food provide a detailed view, but I often coalesce them into "dog" when rendering the data

- I create a category per business trip to make sure that the expense reimbursement matches the money I spent on the trip
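
The "zoom" levels described above amount to truncating a category path. Here is a minimal Python sketch, assuming categories are plain `/`-separated strings. The `coalesce` helper and the sample amounts are hypothetical and only illustrate the idea:

```python
from collections import defaultdict

def coalesce(category, depth=1):
    """Keep only the first `depth` segments of a '/'-separated category."""
    return "/".join(category.split("/")[:depth])

# Made-up sample expenses: (category, amount)
expenses = [
    ("dog/health", 120.0),
    ("dog/toy", 15.5),
    ("dog/food", 60.0),
    ("car/gas", 45.0),
]

# Sum amounts per top-level category
totals = defaultdict(float)
for category, amount in expenses:
    totals[coalesce(category)] += amount

print(dict(totals))
```

Raising `depth` to 2 keeps the detailed view, so the same data can be rendered at either level.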

How do I map transactions to categories?

To do that, I use a custom Clojure DSL and tool to categorize transactions. It lets me provide granular information about a charge in order to map it to a category. For example, here is how I map a Venmo transaction to the category car/wash:

("date-description-amount"
 "car/wash"
 {:description "VENMO PAYMENT XX0702", :amount 220.0, :date "7/4/2022"})

I also support an alternative syntax for Venmo. Here is a single Venmo charge:

("venmo"
 "food/takeout"
 {:date "10/19/2021" :amount 25.0 :note "Pizza from https://www.lunitaspizza.com/"})

The tool also lets me set generic rules. For example, here is the rule for car gas, which matches on the merchant name:

("description%"
 "car/gas"
 #{"Arco" "Chevron" "Valero" "Union 76" "UNION 76" "SHELL" "Shell"})

Over time I have built a large list of categorization rules, and every month I have less and less work to do.
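
To make the two kinds of rules concrete, here is a rough Python restatement of how such rules could be evaluated. This is only a sketch: the real tool is a Clojure DSL, and I am assuming that `date-description-amount` means an exact match on all listed fields and that the `%` in `description%` is a trailing wildcard (a case-sensitive prefix match). The `categorize` function and the Chevron sample transaction are hypothetical.

```python
# Hypothetical Python restatement of two rule kinds from the Clojure config.
RULES = [
    ("date-description-amount", "car/wash",
     {"description": "VENMO PAYMENT XX0702", "amount": 220.0, "date": "7/4/2022"}),
    ("description%", "car/gas",
     {"Arco", "Chevron", "Valero", "Union 76", "UNION 76", "SHELL", "Shell"}),
]

def categorize(tx, rules=RULES):
    """Return the category of the first matching rule, else 'UNCATEGORIZED'."""
    for kind, category, spec in rules:
        if kind == "date-description-amount":
            # Assumption: every field listed in the rule must match exactly.
            if all(tx.get(key) == value for key, value in spec.items()):
                return category
        elif kind == "description%":
            # Assumption: '%' is a trailing wildcard, i.e. a prefix match.
            if any(tx["description"].startswith(prefix) for prefix in spec):
                return category
    return "UNCATEGORIZED"

# Made-up transaction for illustration
print(categorize({"description": "Chevron 0093 OAKLAND CA", "amount": 51.3, "date": "9/2/2022"}))
```

A case-sensitive match would explain why the gas rule lists both "SHELL" and "Shell", but that is a guess about the matching semantics.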

Mapping new uncategorized transactions by hand

When I run the budget by hand, I generate config entries to map new transactions to existing categories:

("date-description-amount" "UNCATEGORIZED" {:description "RED O", :amount 120.68, :date "10/3/2022", :note "AUTOGENERATED"})

("date-description-amount" "UNCATEGORIZED" {:description "HUMBLEBUNDLE.COM SAN FRANCISCO CA", :amount 1.0, :date "10/1/2022", :note "AUTOGENERATED"})

("date-description-amount" "UNCATEGORIZED" {:description "APPLE ONLINE STORE CUPERTINO CA", :amount 42.02, :date "10/1/2022", :note "AUTOGENERATED"})

...

I then decide either to replace "UNCATEGORIZED" with the right category for the entry or to grow an existing rule instead. In the case above I would add to the restaurant and book rules, but would map the one-off Apple Store transaction directly to a category.

Mapping new uncategorized transactions with deep learning

What if we could make it easier to associate transactions with categories by leveraging the mappings we have already put together? We can do that with a small neural network:

from keras import layers

from keras.models import Sequential

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import LabelBinarizer

import keras

import numpy as np

import pandas as pd

# Read transactions coming from the clojure system, at this point some of them are categorized

# some are yet to be categorized and they have the category "UNCATEGORIZED".

# The transactions are also deduped and are merged from all of our financial accounts

df = pd.read_csv("/Users/laurent/Dropbox/Budget/latest/output/September2022.csv")

# Separate categorized and uncategorized transactions

categorized = df[~df["category"].str.contains("UNCATEGORIZED")]

uncategorized = df[df["category"].str.contains("UNCATEGORIZED")]

# Count number of categories

unique_categories = df["category"].nunique() # 66

unique_categories_not_uncategorized = unique_categories - 1 # 65

# Separate features (merchant name only) and labels (category), training and test set

X = categorized["description"].values

Y = categorized["category"].values

X_Train, X_Test, y_train, y_test = train_test_split(X, Y, test_size=0.10, random_state=1000)

# Prepare input by vectorizing the merchant name into TF-IDF features

vectorizer = TfidfVectorizer(max_df=0.4, lowercase=True, stop_words="english", max_features=9000, ngram_range=(1,1))

vectorizer.fit(X_Train)

def to_float_32_array(x):
    return np.array(x.toarray()).astype("float32")

X_train = to_float_32_array(vectorizer.transform(X_Train))

X_test = to_float_32_array(vectorizer.transform(X_Test))

X_valid = to_float_32_array(vectorizer.transform(uncategorized["description"].values))

# Prepare output by encoding it with one hot encoding:

encoder = LabelBinarizer()

encoder.fit(np.asarray(Y))

y_train = encoder.transform(y_train)

y_test = encoder.transform(y_test)

# Build and train the neural net

input_dim = X_train.shape[1] # Number of features

model = Sequential()

model.add(layers.Dense(512, activation="relu", input_dim=input_dim))

model.add(layers.Dense(unique_categories_not_uncategorized, name="Predictions", activation='sigmoid'))

model.compile(loss='binary_crossentropy',
              optimizer='rmsprop',
              metrics=['accuracy'])

model.summary()

history = model.fit(X_train, np.asarray(y_train),
                    epochs=45,
                    verbose=True,
                    validation_data=(X_test, y_test),
                    batch_size=20)

Model: "sequential"

_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 dense (Dense)               (None, 512)               442368
 Predictions (Dense)         (None, 74)                37962
=================================================================
Total params: 480,330
Trainable params: 480,330
Non-trainable params: 0
_________________________________________________________________

Epoch 1/45

1/54 [..............................] - ETA: 7s - loss: 0.6917 - accuracy: 0.0000e+00

29/54 [===============>..............] - ETA: 0s - loss: 0.4794 - accuracy: 0.0310

54/54 [==============================] - 0s 3ms/step - loss: 0.3354 - accuracy: 0.0782 - val_loss: 0.0931 - val_accuracy: 0.1102

Epoch 2/45

1/54 [..............................] - ETA: 0s - loss: 0.0849 - accuracy: 0.1500

29/54 [===============>..............] - ETA: 0s - loss: 0.0757 - accuracy: 0.1569

54/54 [==============================] - 0s 2ms/step - loss: 0.0684 - accuracy: 0.1697 - val_loss: 0.0565 - val_accuracy: 0.2627

...

Epoch 44/45

1/54 [..............................] - ETA: 0s - loss: 0.0013 - accuracy: 1.0000

28/54 [==============>...............] - ETA: 0s - loss: 0.0011 - accuracy: 0.9786

54/54 [==============================] - 0s 2ms/step - loss: 0.0016 - accuracy: 0.9746 - val_loss: 0.0140 - val_accuracy: 0.9068

Epoch 45/45

1/54 [..............................] - ETA: 0s - loss: 1.2082e-05 - accuracy: 1.0000

27/54 [==============>...............] - ETA: 0s - loss: 0.0018 - accuracy: 0.9722

54/54 [==============================] - ETA: 0s - loss: 0.0015 - accuracy: 0.9746

54/54 [==============================] - 0s 2ms/step - loss: 0.0015 - accuracy: 0.9746 - val_loss: 0.0141 - val_accuracy: 0.9068

So 90.68% of the time the network picks the same category as I did, out of 65 different categories. If the model always picked the most frequent category (the strongest naive model, used here to establish a baseline), it would be 10.8% accurate:

import pandas as pd

df = pd.read_csv("/Users/laurent/Dropbox/Budget/latest/output/September2022.csv")

# Share of the most frequent category; .iloc[0] selects the top count by position
print("%.1f" % (100 * (df["category"].value_counts().iloc[0] / df["category"].value_counts().sum())) + "%")

10.8%
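
The majority-class baseline used above is just the share of the most frequent label. Here is a small self-contained sketch of that calculation on made-up labels (the `majority_baseline` helper and the counts below are illustrative, not taken from the real budget data):

```python
from collections import Counter

def majority_baseline(labels):
    """Accuracy of a model that always predicts the most frequent label."""
    counts = Counter(labels)
    _, top_count = counts.most_common(1)[0]
    return top_count / len(labels)

# Made-up labels: 5 of the 10 transactions share the most frequent category
labels = ["food/takeout"] * 3 + ["car/gas"] * 2 + ["dog/food"] * 5
print("%.1f%%" % (100 * majority_baseline(labels)))
```

Any model worth keeping has to beat this number, since reaching it requires no learning at all.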

The model therefore performs far better than the baseline. Great! How about taking it for a spin on uncategorized data?

predictions = encoder.inverse_transform(model.predict(X_valid))

uncategorized.loc[:,"category"]=predictions

predictions

<Displays a list of uncategorized transactions labelled by the neural net>

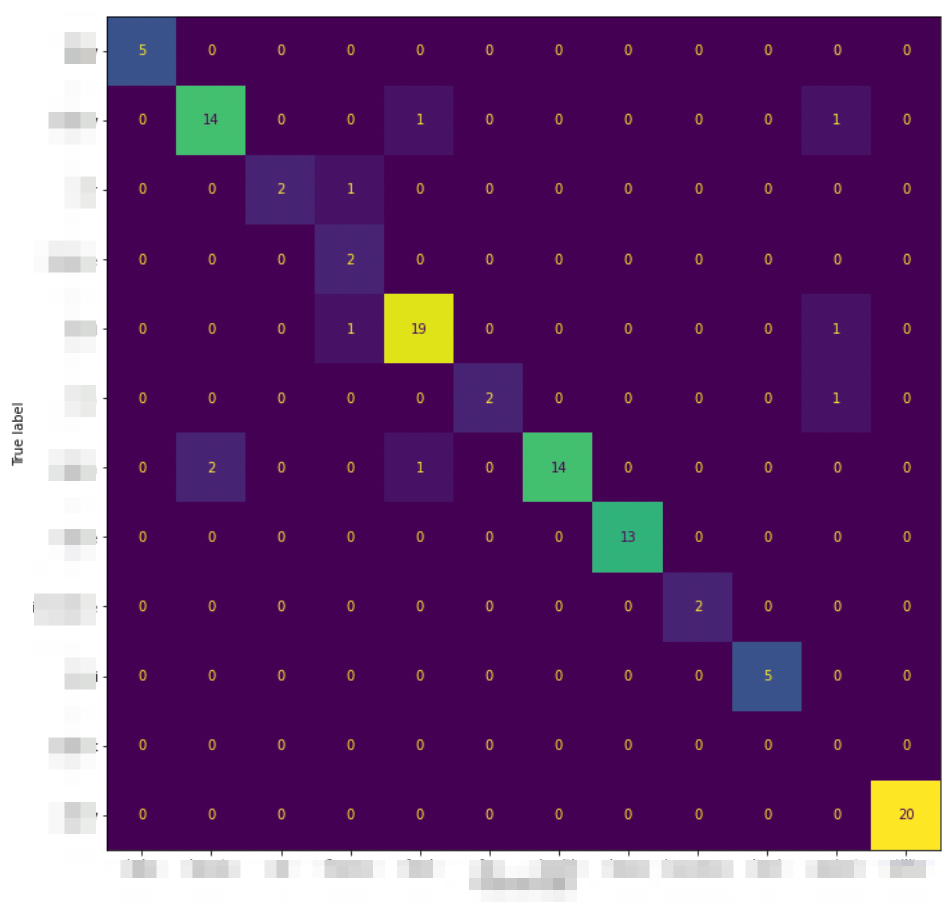
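
`encoder.inverse_transform` returns a single category per row, which hides how sure the network was. One possible refinement, sketched here in plain Python, is to keep the top two scores and flag close calls for manual review. In the code above, `scores` would be one row of `model.predict(X_valid)` and `classes` would be `encoder.classes_`. The `top_predictions` helper, the sample scores, and the 0.2 margin are all hypothetical.

```python
def top_predictions(scores, classes, k=2):
    """Return the k (category, score) pairs with the highest scores."""
    ranked = sorted(zip(classes, scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]

# Made-up scores for one transaction, in the same order as the classes
classes = ["car/gas", "food/restaurant", "food/takeout"]
scores = [0.02, 0.55, 0.40]

best, runner_up = top_predictions(scores, classes)

# Hypothetical rule: review by hand when the top two scores are close
needs_review = best[1] - runner_up[1] < 0.2
print(best, runner_up, needs_review)
```

Only the flagged transactions would then need a manual look before being written back to the config.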

Plotting a confusion matrix to understand the model's performance

Finally, we can look at which categories confuse the model the most:

from sklearn.metrics import confusion_matrix

from sklearn.metrics import ConfusionMatrixDisplay

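y_pred = encoder.inverse_transform(model.predict(X_test))
y_test = encoder.inverse_transform(y_test)

# Compute the confusion matrix and draw it
disp = ConfusionMatrixDisplay(confusion_matrix=confusion_matrix(y_test, y_pred))
disp.plot()

# Enlarge the figure so that every category stays readable
fig = disp.ax_.get_figure()
fig.set_figwidth(14)
fig.set_figheight(14)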

NOTE: I hid the labels here for privacy reasons.

This helped me identify, for example, that trip and food are the most confused categories. That's not surprising, as the difference between eating out and a trip is encoded not so much in the merchant name as in the time of the transaction (which the model does not know about).
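
When the labels are hidden or the matrix is large, the same information can be read off as a ranked list of mistakes. Here is a minimal sketch, assuming `y_true` and `y_pred` are lists of category names like the decoded `y_test` and `y_pred` above. The `most_confused` helper and the sample labels (including the `trip/nyc` category) are made up for illustration.

```python
from collections import Counter

def most_confused(y_true, y_pred, n=3):
    """Return the n most frequent (actual, predicted) pairs among the errors."""
    errors = Counter((t, p) for t, p in zip(y_true, y_pred) if t != p)
    return errors.most_common(n)

# Made-up labels for illustration
y_true = ["trip/nyc", "food/restaurant", "trip/nyc", "car/gas", "food/restaurant"]
y_pred = ["food/restaurant", "food/restaurant", "food/restaurant", "car/gas", "trip/nyc"]

for (actual, predicted), count in most_confused(y_true, y_pred):
    print(f"{actual} predicted as {predicted}: {count}")
```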