Building with LLMs at Scale: Part 3 - Higher-Level Abstractions

In Part 1 I described the pain points of working with multiple LLM sessions. Part 2 covered the ergonomics layer that made individual sessions manageable.

But ergonomics alone isn’t enough when you’re running 5-10 parallel Claude sessions. You need coordination, quality enforcement, and shared context. This article covers the higher-level abstractions that make LLM teams actually work.

The Smoke Test Paradigm: Designing Software for Rapid Iteration

Here’s the key insight: software design principles that help human developers also help LLMs. The same things that trip up human coders—complex interfaces, tight coupling, unclear contracts—trip up LLMs too.

When building software that LLMs will write and modify, the classic principles still apply:

- Modular code: Small, well-defined components

- Simple interfaces: Clear inputs and outputs

- Loose coupling: Changes in one area don’t cascade

- Fast feedback: Know immediately when something breaks

The difference is velocity. LLMs can iterate 10x faster than humans—but only if the feedback loop is tight. That’s where smoke tests become critical.

Why Smoke Tests Over Unit Tests?

I tried comprehensive unit test suites. They worked, but the overhead was crushing:

- Writing tests took longer than writing features

- Tests became brittle as code evolved

- Mocking and fixtures added complexity

- False positives made me ignore failures

The problem: unit tests are designed for human-paced development. When Claude can refactor an entire module in 30 seconds, waiting 5 minutes for a full test suite kills momentum.

Instead, I adopted smoke tests: simple, end-to-end checks that verify the system works. Run in seconds. Clear pass/fail. No ambiguity.

Example from my flashcards project (test/smoke_test.sh):

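#!/bin/bash
# Smoke test: Does the basic workflow work?
set -euo pipefail  # stop at the first failing command so a failure is never reported as a pass

# Create a flashcard
./flashcards create \
  --question "What is 2+2?" \
  --answer "4" \
  --project "math"

# Get quiz items
./flashcards quiz --limit 1 | grep "What is 2+2?"

# Review it (CARD_ID is a placeholder for the id of the card created above)
./flashcards review "${CARD_ID:?set CARD_ID to the id of the new card}" --confidence 5

# Check it's in the list
./flashcards list | grep "What is 2+2?"

echo "✅ Smoke test passed!"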

That’s it. No mocking. No fixtures. No complex assertions. Just: Does it work end-to-end?
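The same shape carries over to a small Python harness. This is a minimal sketch, not the flashcards project's actual test: `Step`, `run_smoke`, and `DEMO_STEPS` are hypothetical names, and the demo steps call the Python interpreter as a stand-in for the CLI under test so the block runs anywhere.

```python
import subprocess
import sys
from typing import NamedTuple


class Step(NamedTuple):
    name: str
    argv: list[str]   # command to run, as an argument list
    expect: str = ""  # substring that must appear in stdout ("" always matches)


def run_smoke(steps: list[Step]) -> tuple[bool, str]:
    """Run steps in order; stop at the first failure and report which step failed."""
    for step in steps:
        proc = subprocess.run(step.argv, capture_output=True, text=True)
        if proc.returncode != 0:
            return False, f"{step.name}: exit code {proc.returncode}"
        if step.expect not in proc.stdout:
            return False, f"{step.name}: expected {step.expect!r} in output"
    return True, "smoke test passed"


# Stand-in commands (hypothetical); a real test would invoke the tool being tested.
DEMO_STEPS = [
    Step("create", [sys.executable, "-c", "print('created card 1')"], "created"),
    Step("list", [sys.executable, "-c", "print('What is 2+2?')"], "2+2"),
]
```

Calling `run_smoke(DEMO_STEPS)` returns a pass/fail flag plus a one-line message, which keeps the failure report as unambiguous as the shell version.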

The Make Test Convention

Every project has a Makefile with a test target:

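# The project has a test/ directory, so "test" must be declared phony;
# otherwise make treats the target as an up-to-date file and skips the recipe.
.PHONY: test
test:
	@echo "Running smoke tests..."
	@./test/smoke_test.sh
	@echo "✅ All tests passed"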

Claude knows this convention. After every code change, it automatically runs make test. If tests fail, Claude must fix them before continuing.

This simple pattern has caught hundreds of regressions. Claude refactors a function? Tests catch it. Claude renames a variable? Tests catch it. Claude adds a feature? Tests verify it.
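The "fix before continuing" rule can be sketched as a gate around `make test`. This is an illustrative sketch only: `commit_if_green` and `shell_runner` are hypothetical names, and the commit step is borrowed from the commit-when-green rule quoted later from the global context note. The runner is injectable so the logic can be exercised without touching a real repository.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], int]


def shell_runner(argv: Sequence[str]) -> int:
    """Run a command and return its exit code."""
    return subprocess.run(list(argv)).returncode


def commit_if_green(message: str, run: Runner = shell_runner) -> bool:
    """Run `make test`; commit only when it exits 0. Returns True if a commit was made."""
    if run(["make", "test"]) != 0:
        return False  # tests failed: fix first, commit nothing
    run(["git", "add", "-A"])
    return run(["git", "commit", "-m", message]) == 0
```

Passing a fake `run` function that records its arguments is enough to check that a failing test run never reaches `git commit`.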

Why This Works

Smoke tests have unique advantages for LLM workflows:

- Fast: Run in seconds, not minutes

- Clear failures: “Command failed” is unambiguous

- Self-documenting: Reading the test shows how the system should work

- Easy to maintain: When features change, tests are obvious to update

- Catches real issues: Integration problems that unit tests miss

The trade-off: you don’t get fine-grained coverage. But in my experience, that’s fine. I’d rather have 90% confidence in 5 seconds than 99% confidence after 5 minutes of test runs.

Memento: Shared Context Between Sessions

The core challenge of parallel LLM sessions: they don’t know about each other.

Session A refactors the authentication system. Session B adds a new feature that uses authentication. Session A’s changes break Session B’s code—but Session B has no idea until tests fail.

I needed a shared knowledge base. Enter memento.

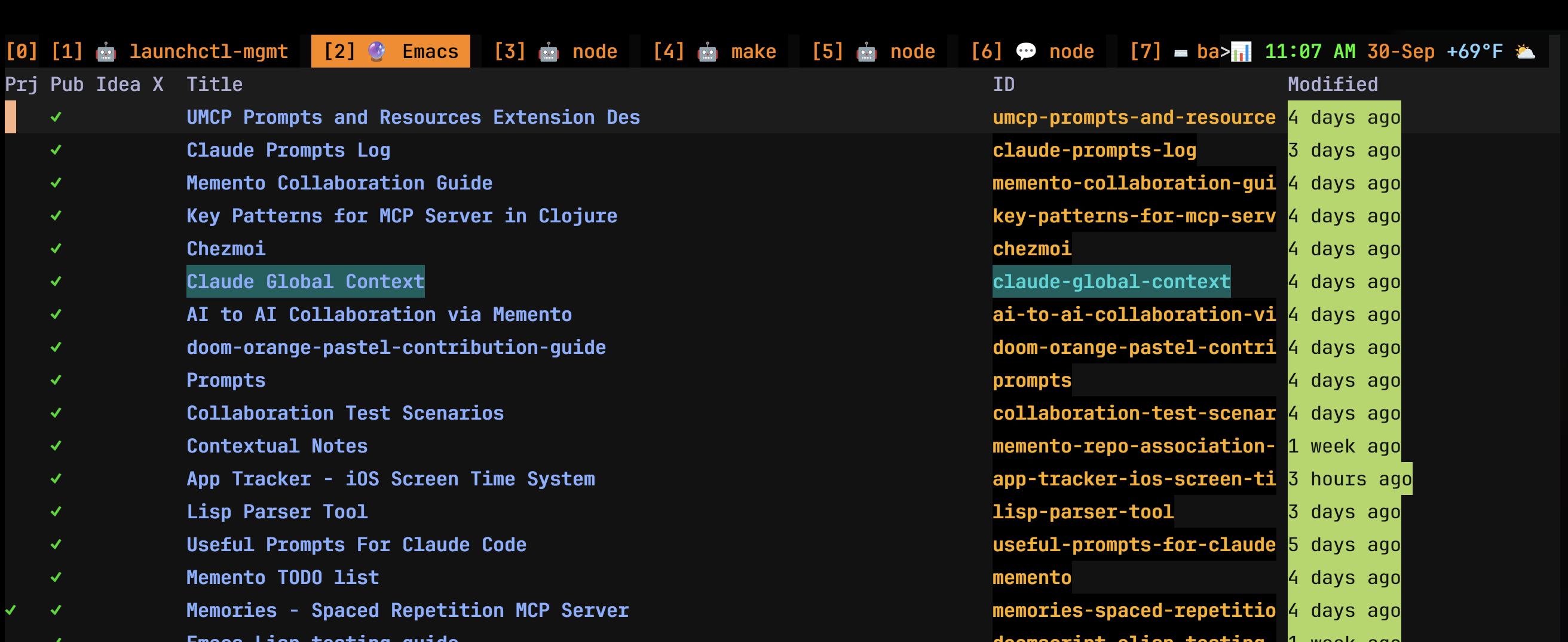

What Is Memento?

Memento is my note-taking system built on org-roam, which implements the Zettelkasten method for networked thought. I expose it to Claude via MCP (Model Context Protocol).

Think of it as a shared brain for all Claude sessions—a personal knowledge graph where notes link to each other, concepts build on each other, and every LLM session can read and contribute to the collective knowledge.

Key features:

- Public notes tagged with PUBLIC are accessible via MCP

- Searchable with full-text search

- Structured with org-mode properties and tags

- Version controlled in git

- Persistent across sessions
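To make the first two features concrete, here is a toy in-memory model of tag-based visibility and full-text search. It is a sketch under assumptions, not how memento works internally (memento sits on org-roam); the `Note` class and both functions are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Note:
    note_id: str
    body: str
    tags: set[str] = field(default_factory=set)


def visible_notes(notes: list[Note]) -> list[Note]:
    """Only notes tagged PUBLIC are exposed to sessions."""
    return [n for n in notes if "PUBLIC" in n.tags]


def search(notes: list[Note], query: str) -> list[str]:
    """Case-insensitive substring search over visible notes; returns matching ids."""
    q = query.lower()
    return [
        n.note_id
        for n in visible_notes(notes)
        if q in n.body.lower() or q in n.note_id.lower()
    ]
```

The point of filtering before searching is that an untagged note can never leak into a session's results, whatever the query.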

The Global Context Pattern

Every Claude session starts by reading the claude-global-context note:

;; Automatically loaded by Claude at session start

(mcp__memento__note_get :note_id "claude-global-context")

This note contains:

- My coding preferences

- Project structure

- Common pitfalls

- Tools available (memento, MCP servers, custom scripts)

- Reminders (never access ~/.roam directly, always use MCP)

As I discover patterns, I add them to this note. Every future Claude session gets that knowledge automatically.

Example from my global context:

## 🧪 Testing Approach:

- Write tests for new features

- Rely on smoke tests for projects (trigger with `make test`)

- **Whenever all tests pass after a change, make a commit with a descriptive message**

## 🔧 ELISP DEVELOPMENT WITH DOOMSCRIPT:

See the note tagged `elisp` for patterns and testing approaches

Session-Specific Context

For complex projects, I create dedicated notes:

- memento-clojure-patterns: Clojure idioms and anti-patterns

- appdaemon-testing-guide: How to test Home Assistant automations

- mcp-server-patterns: How to build reliable MCP servers

When Claude works on these projects, I explicitly reference the notes:

Read the note `mcp-server-patterns` and apply those patterns

to this new server implementation.

Claude reads the note, absorbs the context, and applies it. The next Claude session working on the same project does the same thing—they’re building on shared knowledge.

Coordination Patterns (Experimental)

I’m experimenting with explicit coordination notes for parallel sessions:

# working-on-memento-refactor

## Current State

- Session A: Refactoring CLI argument parsing (IN PROGRESS)

- Session B: Adding new `bulk-update` command (WAITING)

- Session C: Updating tests (COMPLETED)

## Decisions Made

- Use argparse instead of manual parsing (Session A, 2025-09-28)

- All commands must support JSON output (Session B, 2025-09-27)

## Upcoming Work

- [ ] Migrate all commands to new arg structure

- [ ] Add integration tests

- [ ] Update documentation

Each session reads this note before starting work. Session A updates its status when done. Session B sees that and can proceed safely.
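The "read the note, then decide whether to proceed" step can be sketched as a small parser over the status lines shown above. This assumes the note keeps the exact `- Session X: task (STATUS)` shape from the example; `parse_status` and `can_start` are hypothetical helpers, not part of memento.

```python
import re

# Matches lines such as "- Session A: Refactoring CLI argument parsing (IN PROGRESS)".
STATUS_LINE = re.compile(r"^- Session (\w+): (.+) \(([A-Z ]+)\)\s*$")


def parse_status(note_text: str) -> dict[str, str]:
    """Map session name to status for every status line in the note."""
    statuses = {}
    for line in note_text.splitlines():
        match = STATUS_LINE.match(line.strip())
        if match:
            session, _task, status = match.groups()
            statuses[session] = status
    return statuses


def can_start(note_text: str, depends_on: list[str]) -> bool:
    """A session may start once every session it depends on is COMPLETED."""
    statuses = parse_status(note_text)
    return all(statuses.get(dep) == "COMPLETED" for dep in depends_on)
```

With the example note, a session that depends only on Session C could start, while one that depends on Session A would keep waiting.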

This is informal right now—I’m still exploring better patterns. Some ideas:

- Barrier functionality: Session B blocks until Session A completes

- Lock mechanism: Only one session can modify a file at once

- Dependency tracking: Session C depends on Session A and Session B

I’m considering building an MCP server specifically for project coordination. Something like:

# Hypothetical coordination MCP server

mcp_coordinator.claim_file("src/parser.py", session_id="A")

# Other sessions get an error if they try to edit it

mcp_coordinator.add_barrier("refactor-complete", required_sessions=["A", "B"])

mcp_coordinator.wait_for_barrier("refactor-complete") # Blocks until A and B finish
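To show what the semantics behind those calls might look like, here is an in-process sketch using threads. It is not an MCP server, and everything in it is an assumption: the `Coordinator` class, the `FileAlreadyClaimed` error, and the extra `mark_done` method (added because something has to signal that a session finished).

```python
import threading
from typing import Optional


class FileAlreadyClaimed(Exception):
    """Raised when a session tries to claim a file another session owns."""


class Coordinator:
    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._owners: dict[str, str] = {}          # path -> owning session id
        self._pending: dict[str, set[str]] = {}    # barrier -> sessions not yet done
        self._events: dict[str, threading.Event] = {}

    def claim_file(self, path: str, session_id: str) -> None:
        with self._lock:
            owner = self._owners.setdefault(path, session_id)
            if owner != session_id:
                raise FileAlreadyClaimed(f"{path} is claimed by session {owner}")

    def add_barrier(self, name: str, required_sessions: list[str]) -> None:
        with self._lock:
            self._pending[name] = set(required_sessions)
            self._events[name] = threading.Event()
            if not required_sessions:
                self._events[name].set()

    def mark_done(self, name: str, session_id: str) -> None:
        with self._lock:
            self._pending[name].discard(session_id)
            if not self._pending[name]:
                self._events[name].set()  # last required session finished

    def wait_for_barrier(self, name: str, timeout: Optional[float] = None) -> bool:
        with self._lock:
            event = self._events[name]
        return event.wait(timeout)  # True once released, False on timeout
```

A real version would need persistence and a transport so separate Claude processes could share it; the sketch only pins down the claim and barrier behaviour.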

The Supervisor Pattern: Orchestrating LLM Teams

When I need major changes, I run multiple Claude sessions in parallel:

- Session A: Implements feature X

- Session B: Writes tests for feature X

- Session C: Updates documentation

- Session D: Reviews changes from A, B, and C

This is the supervisor pattern—but instead of manually coordinating, I use an LLM to generate prompts for other LLMs.

The Meta-LLM Approach

Here’s the key insight: planning parallel work is itself an LLM task. So I have Claude generate the work breakdown and individual prompts:

- I describe the goal to a planning session: “Implement feature X with tests and docs”

- The planner LLM creates:

  - A work plan broken into phases (represented as a DAG)

  - Individual prompt files for each parallel task

  - Memento-based coordination scheme

  - A supervisor prompt for monitoring progress

- I review and launch using my automation tools

This meta-approach scales much better than manual coordination. The planner understands dependencies, estimates complexity, and generates consistent prompt structures.
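Turning a dependency DAG into phases is a standard topological sort. The sketch below uses Python's `graphlib` and a made-up plan that mirrors the four sessions listed earlier; the dictionary layout is an assumption and says nothing about the real plan format the planner emits.

```python
from graphlib import TopologicalSorter


def phases(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into phases; tasks within one phase can run in parallel."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the plan has a cycle
    result = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything whose dependencies are done
        result.append(ready)
        sorter.done(*ready)
    return result


# Hypothetical plan: each task maps to the set of tasks it depends on.
PLAN = {
    "feature": set(),
    "tests": set(),
    "docs": set(),
    "review": {"feature", "tests", "docs"},
}
```

For `PLAN`, the first phase contains the three independent tasks and the second contains only the review, which is the ordering a supervisor would have to enforce.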

The Tooling: claude-parallel

I built claude-parallel to automate the workflow:

# Step 1: Generate the plan

claude-parallel plan -P myproject -p "requirements.txt"

# This launches a planning Claude session that:

# - Breaks work into phases and tasks

# - Creates prompt files in ~/.projects/myproject/prompts/

# - Generates plan.json with the dependency DAG

# - Creates a supervisor.txt prompt for monitoring

# Step 2: Dispatch work to parallel sessions

claude-parallel dispatch -p prompts/phase-1-task-auth.txt src/auth.py

claude-parallel dispatch -p prompts/phase-1-task-tests.txt tests/test_auth.py

The dispatch command automatically:

- Creates a new tmux window

- Changes to the file’s directory

- Launches Claude with the prompt

- Monitors completion via memento notes
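The first three steps can be approximated by building a single tmux command. This is a guess at the shape, not claude-parallel's implementation: `dispatch_command` is a hypothetical helper, it only builds the argument list without running it, and it assumes that `claude "<prompt text>"` starts a session seeded with that prompt. Completion monitoring is left out.

```python
import shlex
from pathlib import Path


def dispatch_command(prompt_file: str, target_file: str) -> list[str]:
    """Build (not run) a tmux command that opens a window in the target file's directory."""
    prompt = Path(prompt_file).resolve()
    workdir = Path(target_file).resolve().parent
    # Assumption: the claude CLI accepts the prompt text as its first argument.
    launch = f'claude "$(cat {shlex.quote(str(prompt))})"'
    # tmux new-window: -n names the window, -c sets its start directory.
    return ["tmux", "new-window", "-n", prompt.stem, "-c", str(workdir), launch]
```

Returning the argument list instead of executing it makes the command easy to inspect or log before handing it to `subprocess.run`.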

Tmux Automation

For complex projects with many parallel sessions, I use generate_tmuxinator_config:

# Generate tmuxinator config from prompt files

generate_tmuxinator_config -n myproject prompts/*.txt > ~/.config/tmuxinator/myproject.yml

# Launch all sessions at once

tmuxinator start myproject

This creates a tmux session with:

- One window per prompt file

- Proper window naming for easy navigation

- All sessions starting in the correct directory
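A config with one window per prompt file is simple to generate. The sketch below emits the basic tmuxinator layout (`name`, `root`, `windows`); it is not the actual generate_tmuxinator_config script, the function name is hypothetical, and the per-window launch command is an assumption.

```python
import json
from pathlib import Path


def tmuxinator_config(name: str, prompt_files: list[str], root: str = ".") -> str:
    """Render a tmuxinator YAML config with one window per prompt file."""
    lines = [f"name: {name}", f"root: {root}", "windows:"]
    for prompt_file in prompt_files:
        window = Path(prompt_file).stem  # window is named after the prompt file
        # Assumption: each window launches Claude seeded with the prompt text.
        command = f'claude "$(cat {prompt_file})"'
        # A JSON string is also a valid double-quoted YAML scalar, so json.dumps
        # handles the escaping of the embedded quotes.
        lines.append(f"  - {window}: {json.dumps(command)}")
    return "\n".join(lines) + "\n"
```

Writing the returned string to `~/.config/tmuxinator/myproject.yml` would give `tmuxinator start myproject` something to load.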

How I Do It Today

- Write high-level requirements in a text file

- Run claude-parallel plan to generate work breakdown

- Review the generated prompts (adjust if needed)

- Launch sessions via claude-parallel dispatch or tmuxinator

- Use memento for coordination (automatically set up by the planner):

  - Sessions read/write status notes

  - Sessions check phase completion before starting

  - Blocker notes communicate issues

- Rely on smoke tests to catch integration issues

- Monitor via tmux status indicators (see Part 2) or run the supervisor prompt

Persona-Driven Architecture

Assigning roles to sessions improves output quality, but I use personas differently than you might expect.

I use Robert C. Martin (Uncle Bob) as the planner and architect. When breaking down a complex feature into parallel tasks, I ask the planner session:

You are Robert C. Martin (Uncle Bob). Review this feature request and break it

down into clean, well-separated tasks for parallel implementation. Focus on

SOLID principles and clear interfaces between components.

This gives me a work breakdown that follows clean architecture principles: small, focused components with clear responsibilities.

Then for the worker sessions (the ones actually implementing the tasks), I experiment with different prompts. Sometimes specific personas help:

- “You are obsessed with performance and correctness” for algorithm-heavy code

- “You are paranoid about edge cases and defensive programming” for input validation

- “You value simplicity above all else, avoid any unnecessary complexity” for utility functions

Other times, I just use the task description from the planner without additional persona framing. I’m still experimenting with what works best for different types of work.

What’s Missing

Current gaps in my supervisor pattern:

- No automatic conflict detection: I manually ensure sessions don’t edit the same files

- No rollback mechanism: If Session A breaks tests, I manually revert

- No progress tracking: I eyeball tmux windows instead of having a dashboard

- No automatic merging: I manually integrate changes from parallel sessions

These are ripe for automation. The MCP coordination server would solve the first three. The fourth, automatic merging, might need a specialized “merger” session that reads changes from all other sessions and integrates them.
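The conflict-detection gap is the easiest to prototype. Assuming each session's prompt declares the files it intends to edit (an assumption; the current prompts may not), overlaps can be found before anything is launched. `find_conflicts` is a hypothetical helper.

```python
from collections import defaultdict


def find_conflicts(planned_files: dict[str, set[str]]) -> dict[str, list[str]]:
    """Return {path: [sessions]} for every path that more than one session plans to edit."""
    owners = defaultdict(list)
    for session, paths in sorted(planned_files.items()):
        for path in paths:
            owners[path].append(session)
    return {path: sessions for path, sessions in owners.items() if len(sessions) > 1}
```

An empty result means the plan is safe to dispatch in parallel; anything else names the files to reassign or serialize.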

Knowledge Accumulation Over Time

Traditional LLM conversations are ephemeral. Each session starts fresh. But with memento, knowledge compounds.

Example workflow:

- Week 1: I discover that MCP servers should validate input strictly

- I add to global context: “MCP servers must validate all inputs and return clear error messages”

- Week 2: Claude builds a new MCP server, automatically applies that pattern

- Week 3: I discover another pattern (connection pooling), add it to global context

- Future sessions: Apply both patterns automatically

Over months, my global context evolved from 50 lines to 500+ lines of hard-won knowledge. New Claude sessions are more productive from day one.

The Memento Notes Index

To make knowledge discoverable, I maintain a memento-notes-index:

## Development & Technical Guides

- **mcp-server-patterns**: Patterns for building reliable MCP servers

- **smoke-test-paradigm**: Why smoke tests work better than unit tests

- **elisp-testing-guide**: Fast testing with doomscript

- **code-review-guide**: How to review code and log issues for AI

## Quick Lookup by Use Case

- Building MCP servers → `mcp-server-patterns`

- Emacs development → `elisp-testing-guide`

- Testing frameworks → `smoke-test-paradigm`

When Claude asks “How should I structure this?”, I can say: “Check the notes index for relevant guides.”

Key Learnings

After 6 months of parallel LLM workflows:

Smoke tests are a game-changer. By my rough estimate, they catch 90% of issues with 10% of the effort of comprehensive test suites.

Shared context is essential. Without memento, each session reinvents the wheel. With it, knowledge compounds.

Personas improve output quality. In my experience, “Be Uncle Bob” produces cleaner results than a bare “refactor this,” though I’m still experimenting with which personas help worker sessions.

Informal coordination works at small scale. For 3-5 parallel sessions, a shared note is enough. Beyond that, I’ll need real tooling.

Every discovery should be captured. If I solve a problem once, I never want to solve it again. Write it down in memento.

What’s Next

The patterns in this article work but aren’t fully automated. I’m manually coordinating sessions, manually managing shared context, manually merging changes.

Part 4 covers experiments and works-in-progress: the project explorer tool, Emacs integration for code review, diff workflows, and ideas that didn’t quite work out.

Part 5 shifts to learning: using Claude to generate flashcards, worksheets, and annotated code for studying complex topics.

The memento system is open source at github.com/charignon/memento. The global context patterns are in my CLAUDE.md. The flashcards smoke tests are at github.com/charignon/flashcards.